In the first two parts of our predictive maintenance blog series, we explored the basics, challenges, use cases and key steps for preparing and analyzing data. With this foundation in place, we now turn to one of the most critical aspects of predictive maintenance: predicting and preventing mechanical failures before they happen. In this blog entry, we will talk you through the key steps for selecting the right model, training it successfully and deploying predictive maintenance solutions.

Challenges and Strategies for Model Training

Industrial systems typically operate for long periods without disruption. Because deviations from normal behavior are rare, they are treated as anomalies. The goal of anomaly detection training is for the model to learn normal patterns from sensor data so that unusual patterns can be identified immediately. If sufficient labeled historical data is available, it can be split into training, validation and test datasets once it has been prepared. While traditional machine learning models are trained on the full dataset, advanced approaches such as the variational autoencoder (VAE) deliver impressive results by learning patterns solely from error-free states. The VAE is a type of neural network that calculates a probability or anomaly score for each data point; this measures how well the data point matches the learned normal patterns. If the probability is too low, the data point deviates significantly from the learned structures, which suggests an error or anomaly.
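The train-on-healthy-data, score, then threshold logic can be illustrated without a neural network. The following is a minimal sketch that deliberately swaps the VAE for a much simpler stand-in: one Gaussian per feature, fitted only to error-free readings, with the negative log-likelihood as the anomaly score. The function names, the sample readings and the quantile-based threshold are illustrative assumptions.

```python
import math
from statistics import mean, pstdev

def fit_normal_profile(healthy_rows):
    """Learn mean and standard deviation per feature from error-free data only."""
    columns = list(zip(*healthy_rows))
    return [(mean(col), max(pstdev(col), 1e-9)) for col in columns]

def anomaly_score(row, profile):
    """Negative log-likelihood under independent Gaussians: higher means more unusual."""
    score = 0.0
    for value, (mu, sigma) in zip(row, profile):
        z = (value - mu) / sigma
        score += 0.5 * z * z + math.log(sigma) + 0.5 * math.log(2 * math.pi)
    return score

def pick_threshold(healthy_rows, profile, quantile=0.99):
    """Assumption: flag anything scoring above this quantile of the healthy scores."""
    scores = sorted(anomaly_score(r, profile) for r in healthy_rows)
    index = min(len(scores) - 1, int(quantile * len(scores)))
    return scores[index]

# Hypothetical healthy readings: (temperature in degrees C, vibration in mm/s)
healthy = [(70.0 + 0.1 * (i % 7), 2.0 + 0.05 * (i % 5)) for i in range(200)]
profile = fit_normal_profile(healthy)
threshold = pick_threshold(healthy, profile)

def is_anomaly(row):
    """A reading is suspicious if it fits the learned normal profile poorly."""
    return anomaly_score(row, profile) > threshold
```

A VAE replaces the hand-written Gaussian with a learned model of normal behavior, but the surrounding workflow stays the same: fit on healthy data, score new points, compare against a threshold.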

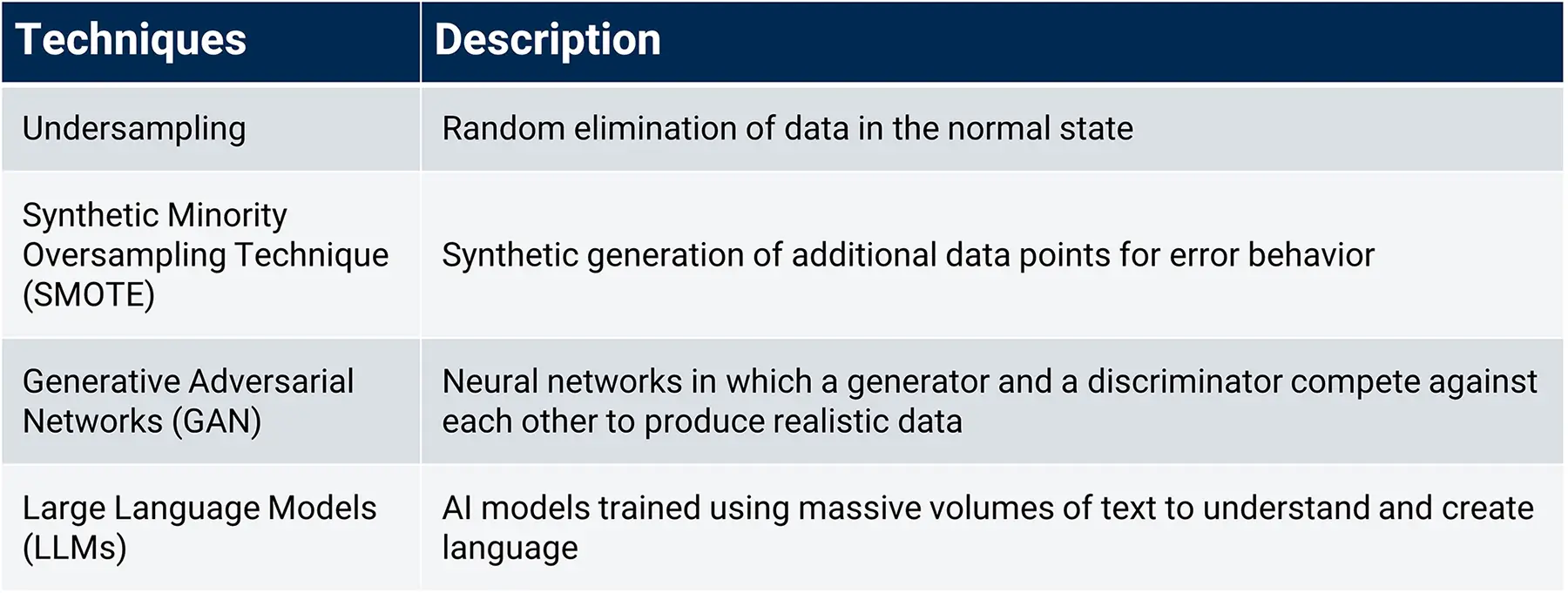

Models such as the VAE do not require error data, which is an advantage because such data is scarce in many applications. This scarcity often leads to imbalanced datasets, a major problem in predictive maintenance. The table below provides an overview of various strategies that can help you compensate for this data imbalance.

There are various methods you can use to recognize and map rare error patterns. Techniques like undersampling or SMOTE help rebalance the classes in the dataset. Alternative approaches, such as generative AI (e.g. GANs or LLMs), can create additional data points for defects. Importantly, there is no universal solution. Try out different approaches to find the best fit for your use case.
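To make the two rebalancing techniques more concrete, here is a rough sketch in plain Python. `undersample` randomly drops majority-class rows, and `smote_like` is a simplified imitation of SMOTE that interpolates between a minority row and one of its nearest minority neighbors. Both function names and the sample data are hypothetical. In practice you would more likely reach for a maintained library implementation.

```python
import random

def undersample(rows, labels, seed=0):
    """Randomly drop rows until every class is as small as the rarest one."""
    rng = random.Random(seed)
    by_class = {}
    for row, label in zip(rows, labels):
        by_class.setdefault(label, []).append(row)
    smallest = min(len(group) for group in by_class.values())
    balanced = []
    for label, group in by_class.items():
        for row in rng.sample(group, smallest):
            balanced.append((row, label))
    rng.shuffle(balanced)
    return [r for r, _ in balanced], [y for _, y in balanced]

def smote_like(minority_rows, n_new, k=3, seed=0):
    """Create synthetic minority rows (needs at least two real minority rows)."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority_rows)
        # k nearest other minority rows by squared Euclidean distance
        neighbors = sorted(
            (r for r in minority_rows if r is not base),
            key=lambda r: sum((a - b) ** 2 for a, b in zip(base, r)),
        )[:k]
        target = rng.choice(neighbors)
        gap = rng.random()  # position on the segment between base and target
        synthetic.append(tuple(a + gap * (b - a) for a, b in zip(base, target)))
    return synthetic

# Hypothetical dataset: 95 healthy readings, 5 faulty ones
healthy = [(70.0 + i * 0.01, 2.0) for i in range(95)]
faulty = [(90.0, 5.0), (91.0, 5.5), (92.0, 6.0), (93.0, 5.2), (94.0, 6.1)]
rows = healthy + faulty
labels = [0] * 95 + [1] * 5

balanced_rows, balanced_labels = undersample(rows, labels)
extra_faults = smote_like(faulty, n_new=20)
```

Undersampling discards information from the healthy class, while interpolation invents fault data that was never observed, so it is worth evaluating both on a held-out set that keeps the original class balance.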

How to Find the Right Model for You

There is no “one size fits all” algorithm for predictive maintenance. As with most machine learning (ML) tasks, the best model depends on the nature of your available data and the specific requirements of your use case. We covered the basic methods of data preparation in part two of this blog series. The next step is to test, evaluate and compare different models. This iterative process can be supported by tools such as MLflow. This open-source platform systematically documents experiments, including parameters, model versions and results, which allows for greater transparency and traceability throughout the development process.

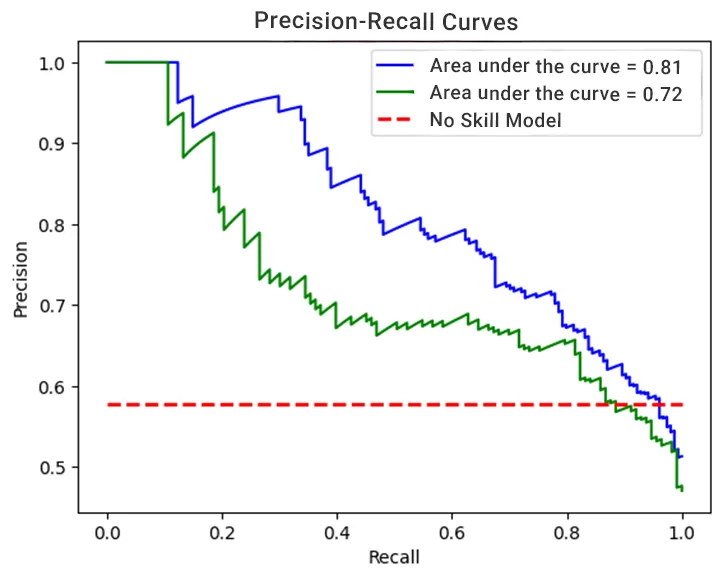

The F1 score and precision-recall curves are among the most commonly used model evaluation metrics, as they are particularly suited to imbalanced datasets. They help you relate two central aspects of a model: precision and recall. How the two are weighted may vary depending on the use case. Some use cases concentrate on detecting every possible fault early. This may lead to an increase in false positive predictions and thus to unnecessary maintenance or component replacement. If this replacement involves high costs or considerable effort, it might make more sense to maximize component runtime and accept a slightly higher risk of unexpected failure. Precision-recall curves help you choose a decision threshold that minimizes either false positives or false negatives. Since models typically can’t maximize both precision and recall at once, a compromise is often necessary. Precision is the share of correct positive predictions among all cases classified as positive. Recall, on the other hand, is the share of correct positive predictions among all actual positive cases. The F1 score is the harmonic mean of precision and recall. A precision-recall curve can be created for various algorithms, or for various parameters of a single model, by plotting the respective precision and recall values for all possible thresholds.
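As a small worked example of this trade-off, the sketch below computes precision, recall and F1 (taken here as the harmonic mean of the two) at two different decision thresholds. The scores, labels and threshold values are made up for illustration.

```python
def confusion_counts(y_true, y_pred):
    """Count true positives, false positives and false negatives."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, fp, fn

def precision_recall_f1(y_true, y_pred):
    tp, fp, fn = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1

def predict_at(scores, threshold):
    """Turn failure scores into 0/1 predictions for a given threshold."""
    return [1 if s >= threshold else 0 for s in scores]

# Hypothetical model output: 1 = component actually failed
y_true = [0, 0, 0, 0, 0, 0, 1, 0, 1, 1]
scores = [0.05, 0.10, 0.20, 0.30, 0.35, 0.40, 0.45, 0.60, 0.80, 0.90]

# Low threshold: catch every fault, accept some unnecessary replacements
cautious = precision_recall_f1(y_true, predict_at(scores, 0.40))
# High threshold: avoid unnecessary replacements, accept a missed fault
frugal = precision_recall_f1(y_true, predict_at(scores, 0.70))
```

With this toy data, the low threshold finds all three failures but raises two false alarms (precision 0.6, recall 1.0), while the high threshold raises no false alarms but misses one failure (precision 1.0, recall about 0.67).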

The area under the precision-recall curve summarizes model performance for a given set of parameters. It enables comparison with a so-called “no-skill” model, which cannot distinguish between the classes and whose precision therefore equals the proportion of positive cases in the dataset. A perfect model would achieve a maximum value of 1 for both precision and recall; this would correspond to an area of 1 below the curve. The smaller the area, the worse the model performance. The graphic above shows that the blue curve encompasses a larger area than the green curve, indicating better results.
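The following sketch shows one common way to approximate that area: sweep the threshold from the highest score downwards, record a (recall, precision) point at each step, and sum precision times the gain in recall. This step-wise sum is often called average precision. The data and function names are illustrative assumptions.

```python
def pr_curve(y_true, scores):
    """Return (recall, precision) points, one per distinct threshold, highest first."""
    total_pos = sum(y_true)
    ranked = sorted(zip(scores, y_true), key=lambda pair: -pair[0])
    points, tp, fp = [], 0, 0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Consume every item tied at this score before emitting a point
        while i < len(ranked) and ranked[i][0] == threshold:
            tp += ranked[i][1]
            fp += 1 - ranked[i][1]
            i += 1
        points.append((tp / total_pos, tp / (tp + fp)))
    return points

def average_precision(points):
    """Step-wise area under the curve: sum of precision times recall gain."""
    area, prev_recall = 0.0, 0.0
    for recall, precision in points:
        area += (recall - prev_recall) * precision
        prev_recall = recall
    return area

def no_skill_baseline(y_true):
    """Precision of a model that cannot separate the classes."""
    return sum(y_true) / len(y_true)

# Hypothetical model output: 1 = component actually failed
y_true = [0, 0, 0, 0, 0, 0, 1, 0, 1, 1]
scores = [0.05, 0.10, 0.20, 0.30, 0.35, 0.40, 0.45, 0.60, 0.80, 0.90]

area = average_precision(pr_curve(y_true, scores))
baseline = no_skill_baseline(y_true)
```

For this toy data the area comes out at roughly 0.92 against a no-skill baseline of 0.3, so the hypothetical model clearly adds value. Comparing two candidate models then amounts to comparing their areas on the same evaluation data.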

What Really Matters When Choosing the Right Model

Beyond classic evaluation metrics like precision or recall, several other factors play a decisive role in selecting and training a suitable model, such as computing time, model size and customizability. Even excellent results are of little use if the model is too slow for real-time applications. As more solutions move to (or closer to) edge devices, large models quickly hit their limits due to limited storage and computing capacity. In many cases, smaller models that still deliver reliable results are the better choice. Another important aspect is customizability. Models that can be easily retrained offer a major advantage where fast reactions are required.

This is closely tied to the concept of continuous learning. Predictive maintenance models require constant monitoring and frequent retraining to take changes in the data into account. Depending on the approach, tools like AutoKeras, auto-sklearn and Featuretools can support sustainable model management. A key element of this strategy is defining criteria that govern how often models are retrained and under what circumstances they should be replaced. Instead of relying on one single, complex model, combining multiple simple models can be a powerful alternative. This approach, known as ensemble learning, runs several algorithms in parallel, reducing dependency on any single model, for example when one of them needs to be retrained. In many cases, the models can even compensate for one another’s errors.
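A very simple form of ensemble learning is a majority vote. The sketch below combines three deliberately trivial detectors and keeps producing a decision when one of them is taken offline, for example for retraining. The detector functions, limits and readings are hypothetical stand-ins for real trained models.

```python
from statistics import mean

def peak_detector(limit):
    """Flags a window if its highest reading exceeds a fixed limit."""
    return lambda window: max(window) > limit

def trend_detector(max_rise):
    """Flags a window if readings rose by more than max_rise from start to end."""
    return lambda window: window[-1] - window[0] > max_rise

def level_detector(limit):
    """Flags a window if its average reading exceeds a fixed limit."""
    return lambda window: mean(window) > limit

def ensemble_vote(detectors, window):
    """Majority vote over the detectors that are currently available."""
    active = [d for d in detectors.values() if d is not None]
    votes = sum(1 for d in active if d(window))
    return votes * 2 > len(active)

# Hypothetical ensemble for a temperature signal in degrees C
detectors = {
    "peak": peak_detector(80.0),
    "trend": trend_detector(5.0),
    "level": level_detector(75.0),
}

calm = ensemble_vote(detectors, [70, 72, 74, 79, 78])      # only "trend" fires
critical = ensemble_vote(detectors, [74, 76, 78, 81, 83])  # all three fire

# Take one model offline, e.g. while it is being retrained
detectors["level"] = None
still_running = ensemble_vote(detectors, [74, 76, 78, 81, 83])
```

Majority voting is only one option. Weighted votes or averaging the individual anomaly scores follow the same pattern and may suit cases where some models are known to be more reliable than others.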

From Model Selection to Roll-Out

Predictions are only useful if they trigger actionable steps that allow quick, precise and proactive reactions. When the system detects an imminent failure, it’s essential to immediately notify the right people and communicate the next steps that need to be taken. This is where repair plans come into play. These predefined plans can be stored in a database and automatically sent to the relevant teams when needed. Generative AI can also support a quicker reaction to diagnosed failures; predefined prompts can help automate the creation of a suitable repair plan.
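As a rough illustration of stored repair plans, the sketch below keeps them in an in-memory SQLite database and builds a notification for the responsible team once a failure mode has been predicted. The table layout, failure modes, team names and the fallback behavior are all assumptions made for this example, and the actual sending (email, ticket system, messaging) is left out.

```python
import sqlite3

def create_plan_store():
    """Set up a small in-memory database with predefined repair plans."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE repair_plans ("
        "failure_mode TEXT PRIMARY KEY, team TEXT, steps TEXT)"
    )
    conn.executemany(
        "INSERT INTO repair_plans VALUES (?, ?, ?)",
        [
            ("bearing_wear", "mechanics",
             "Stop line; Replace bearing; Recalibrate"),
            ("overheating", "electrical",
             "Reduce load; Inspect cooling fan; Check sensors"),
        ],
    )
    return conn

def build_notification(conn, machine_id, failure_mode):
    """Look up the plan for a predicted failure and address it to the right team."""
    row = conn.execute(
        "SELECT team, steps FROM repair_plans WHERE failure_mode = ?",
        (failure_mode,),
    ).fetchone()
    if row is None:
        # Assumption: unknown failure modes are escalated for manual diagnosis
        return {"machine": machine_id, "team": "maintenance_lead",
                "steps": ["Manual diagnosis required"]}
    team, steps = row
    return {"machine": machine_id, "team": team,
            "steps": [s.strip() for s in steps.split(";")]}

conn = create_plan_store()
known = build_notification(conn, "press-07", "bearing_wear")
unknown = build_notification(conn, "press-07", "oil_leak")
```

The fallback branch is also a natural place to plug in a generative model that drafts a plan from a predefined prompt, ideally with a human review before the plan is sent out.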

To ensure these measures are triggered and executed reliably, you need a robust technical infrastructure. A well-designed architecture and sustainable pipelines enable the stable deployment of models in production environments. They regulate and automate critical steps such as raw data processing, feature engineering, result preparation, model monitoring and continuous learning. While conventional ML pipelines integrate seamlessly with cloud environments, predictive maintenance often requires edge-ready solutions, especially when machines lack permanent connectivity or require low latency.

Conclusion

Transitioning from model development to productive use requires a clear, structured approach. From selection and training, right through to operational implementation, every step can be planned strategically to build a solution that fits your specific use case. We look forward to helping you implement predictive maintenance successfully within your organization.

Already have a Project in Mind?

Explore our comprehensive data analytics portfolio and connect with our experts: no strings attached! Together, we’ll turn your project into a success.